CSA STAFF NOTICE AND CONSULTATION 11-348 – THE AI REVOLUTION: REDEFINING CANADIAN SECURITIES LAW

PART 1 – OVERARCHING THEMES

Brian Koscak, PCMA Vice Chair

On December 5, 2024, the Canadian Securities Administrators (the CSA) published CSA Staff Notice and Consultation 11-348 – Applicability of Canadian Securities Laws and the Use of Artificial Intelligence Systems in Capital Markets (the Notice).

This article is the first in a three-part series consisting of:

- Part 1 – Overarching Themes, which discusses the overarching themes identified by the CSA, including technology neutrality in securities regulation, artificial intelligence (AI) governance and oversight, explainability requirements, disclosure obligations, and the management of AI-related conflicts of interest;

- Part 2 – Registrant Guidance, which discusses the Notice’s specific application to Registrants (i.e., dealers, advisers and investment fund managers (IFMs), and the reporting issuer investment funds they manage); and

- Part 3 – Non-Investment Fund Reporting Issuer Guidance, which discusses how the Notice impacts non-investment fund reporting issuers.

A. Purpose

The purpose of the Notice is to provide clarity and guidance on how Canadian securities law applies to the use of “AI systems” by registrants, non-investment fund reporting issuers, marketplaces, marketplace participants, clearing agencies, matching service utilities, trade repositories, designated rating organizations and designated benchmark administrators (collectively, Market Participants).

The Notice outlines selected requirements under Canadian securities law that Market Participants should consider during an AI system’s lifecycle, and it explains how the CSA interprets applicable Canadian securities law in this context. The CSA also included consultation questions to seek stakeholder feedback on the use of AI systems in the capital markets. These questions are set out at the end of this article.

B. AI system lifecycle

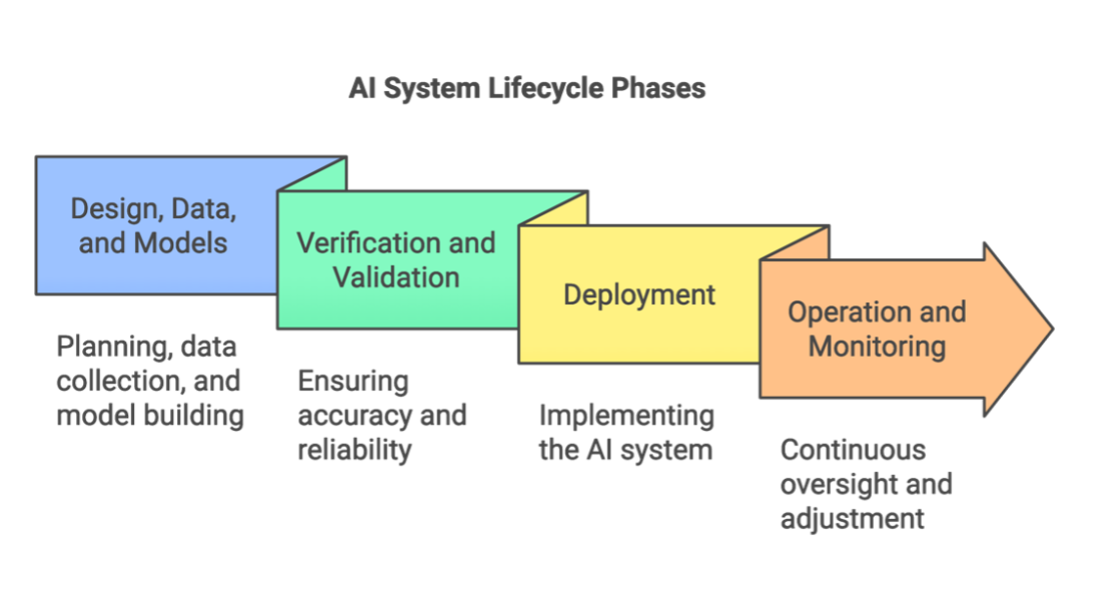

The Notice outlines selected requirements under Canadian securities law that Market Participants should consider during an AI system’s lifecycle. For clarity, an “AI system lifecycle” involves four phases which is also illustrated in the diagram below.

The first phase, ‘design, data and models,’ is a context-dependent sequence that encompasses the foundational elements of planning and design, data collection and processing, and model building. This initial phase sets the groundwork for the entire system’s development and functionality.

The second phase focuses on ‘verification and validation,’ where the system undergoes thorough testing and evaluation to ensure it meets all necessary requirements and performs as intended. This crucial step helps identify potential risks and ensures compliance with regulatory standards.

The third phase, ‘deployment,’ involves the actual implementation of the AI system into the operational environment, marking the transition from development to practical application. This phase requires careful attention to integration with existing systems and establishment of proper controls.

The final phase, ‘operation and monitoring,’ represents the ongoing maintenance and oversight of the system once it’s in active use. This phase is crucial for ensuring the system continues to perform effectively and remains compliant with regulatory requirements over time. Regular monitoring and adjustments are essential components of this phase.

It is important to note that these phases are not always sequential. They often occur iteratively, meaning they may repeat or happen concurrently. The stage of the AI system lifecycle may therefore affect how the CSA’s guidance applies and how you respond to the consultation questions posed in the Notice.

C. The CSA’s Goal in Publishing the Notice

By publishing the Notice, the CSA aims to proactively regulate AI in the capital markets, fostering innovation while protecting investors. The CSA seeks to support an environment where AI can enhance market efficiency and the investor experience while potential risks are managed. Its goal is to support responsible Canadian AI development while mitigating systemic risks and upholding fair, transparent markets.

As the CSA states in the Notice,

“We strive to support an environment where the deployment of AI systems enhances the investor experience while the risk of investor harm is addressed; where markets can benefit from potential efficiencies and increased competition brought on by the use of AI systems; where capital can be invested in Canadian companies developing AI systems responsibly; and where any new types of risks, including systemic risks, are appropriately addressed.” (2024), 47 OSCB 9307

D. Prior CSA Publications on AI and the Canadian Capital Markets

The CSA seeks to harmonize its approach to AI regulation both domestically and internationally. Examples of recent CSA member publications on AI and the capital markets include:

- Artificial Intelligence in Capital Markets: Exploring Use Cases in Ontario

- Artificial Intelligence and Retail Investing: Scams and Effective Countermeasures

- Artificial Intelligence and Retail Investing: Use Cases and Experimental Research

- Issues and Discussion Paper – Best Practices for the Responsible Use of AI in the Financial Sector

The CSA is also collaborating internationally with organizations like the International Organization of Securities Commissions (IOSCO) to align global standards. The Notice states that IOSCO’s FinTech Taskforce is actively exploring the risks and opportunities of AI in capital markets and developing tools and recommendations for its members.

E. Focus of this Article

The Notice is divided into three parts, and this article focuses on the first:

- Overarching themes – the general overarching themes that apply to the use of AI systems in capital markets across Market Participants;

- Identification and application of securities laws – the identification of specific securities laws and guidance and how they apply to various Market Participants in Canada; and

- Consultation questions – the Notice has 10 consultation questions, as set out at the end of this article, where the CSA requests stakeholder feedback on their use of AI systems in the capital markets. The CSA seeks the industry’s feedback no later than March 30, 2025.

F. Overarching Themes Relating to the Use of AI Systems

The various overarching themes identified by the CSA are as follows:

a. Technology & Securities Regulation

The CSA’s basic principle is that securities laws are technology-neutral; however, not all technologies are treated the same. It’s like applying the same health and safety regulations to both a home kitchen and a commercial restaurant. While the overarching principles, such as cleanliness and food safety, remain consistent, a commercial kitchen requires additional protocols like inspections and specialized equipment standards because of its scale and complexity. Similarly, while securities laws apply to all technologies, AI introduces unique challenges, such as algorithmic transparency, bias, and data security, requiring specific approaches to ensure these risks are addressed, while maintaining investor protections.

The Notice emphasizes that it’s not the technology itself being regulated, but the activities being conducted through that technology. This distinction is crucial because it allows the regulatory framework to adapt as AI systems and technology evolve. The CSA isn’t trying to regulate algorithms; they are regulating what those algorithms do in the context of securities markets.

Perhaps most importantly, the CSA points out that principles-based securities legislation can accommodate technological innovation. The existing frameworks can be flexible enough to accommodate new technologies while maintaining their protective function.

b. AI Governance & Oversight

The CSA states that governance and risk management should be a top priority for Market Participants when deploying AI systems. They’re expecting robust oversight without mandating specific technologies or structures. The requirement for a “human-in-the-loop” is interesting. It’s not just about having someone watch the machines; rather, it’s about ensuring meaningful human oversight.

The CSA outlines specific elements that should be included in AI governance frameworks, from initial planning and design to ongoing monitoring and validation. It’s a comprehensive approach that recognizes AI systems aren’t static tools, but evolving entities that require continuous oversight. The emphasis on AI literacy among users is noteworthy. The CSA is saying that it’s not enough to have the technology; you need people who understand how to use it responsibly.

Simply put, the CSA isn’t just saying “govern your AI”; they’re providing a roadmap of sorts for how to do it. The Notice provides specific, actionable steps for building AI governance frameworks, addressing important aspects ranging from the accuracy of data used to the careful supervision of third-party service providers.

c. Explainability

The CSA basically says that if you can’t explain how your AI made a decision, you probably shouldn’t be using it. In a world where many AI systems generate their outputs without showing their work, the CSA requires transparency. The CSA states that an AI system’s transparency depends on how openly its inner workings are shared. As the CSA states,

“A transparent AI system provides clear information about its architecture, data sources, and algorithms. However, transparency alone doesn’t guarantee that the system’s outputs are easily understood by humans. While a system can be transparent but still have low explainability (complex and hard-to-interpret decisions), a model with high explainability inherently promotes transparency. This is because explainable models make it easier for users to understand the process by which their output was generated, thereby increasing trust and confidence in the AI system.”

The CSA expects Market Participants to prioritize explainable AI even when more opaque systems might offer better performance.

The explainability theme tackles head-on the challenge of “black box” AI systems (i.e., those whose decision-making processes are so complex they’re effectively incomprehensible to humans). The CSA’s position is clear: “… the need for advanced capabilities must be balanced against the need for explainability”. It’s an approach that recognizes the trade-off between AI sophistication and transparency, and comes down firmly on the side of understanding over raw performance.

What makes this theme significant is its implicit challenge to the financial technology industry. By insisting on explainability, the CSA is effectively telling developers and institutions that they need to build different kinds of AI systems, ones that prioritize transparency and interpretability from the ground up.

It remains to be seen how stakeholders will respond to this CSA requirement, particularly as the CSA appears to restrict AI systems to executing rule-based algorithms, without seemingly recognizing their potential to interpret and make decisions in a less than transparent way.

d. Disclosure

The CSA’s approach to disclosure represents a direct challenge to the “AI washing” occurring in certain parts of the financial markets. Like its environmental counterpart “greenwashing”, AI washing involves making inaccurate, false, misleading, or embellished claims about AI capabilities to attract investors. The CSA requires specific, accurate disclosures about how AI is actually being used, not just vague promises about “AI-powered” solutions.

This theme’s requirements go beyond simple truth-in-advertising. The CSA expects Market Participants to provide investors with enough information to understand not just that AI is being used, but also how it’s being used and what risks that usage entails. It’s a level of transparency that could change how financial institutions market their AI capabilities.

Perhaps most importantly, the disclosure requirements extend across all types of communications, from formal offering documents to marketing materials and client agreements. The CSA is effectively saying that any claim about AI usage needs to be backed by substance (i.e., subject to due diligence scrutiny), a requirement that could help separate genuine AI innovation from mere technological window dressing.

e. Conflicts of Interest

The CSA’s treatment of AI-related conflicts assumes that algorithms can create new types of conflicts that traditional frameworks might miss. From biased data sets to flawed code that favours certain outcomes, AI systems can introduce subtle distortions that might not be immediately apparent to human overseers.

What makes this theme particularly powerful is its recognition that AI-related conflicts require new monitoring approaches. The Notice recommends specific mechanisms for detecting and managing these conflicts, including the use of AI systems to monitor other AI systems, a sort of technological checks and balances. It’s a sophisticated approach that recognizes both the complexity of AI-related conflicts and the potential for technological solutions.

The requirements for managing conflicts go beyond disclosure or avoidance. The CSA is expecting active monitoring and management of AI-related conflicts. The CSA wants to make sure that automated systems don’t make decisions that systematically favour the interests of Market Participants over those of their clients/investors.

Next Steps

The Notice represents a significant step in adapting securities regulation to the AI era, establishing foundational principles through its overarching themes of technology-neutral regulation, robust governance, explainability requirements, transparent disclosure, and conflict management. These themes set the stage for more detailed guidance specific to different Market Participants.

Part 2 of this series discusses how the Notice specifically applies to dealers, advisers and IFMs.

The CSA’s consultation period ends on March 30, 2025. The consultation provides an opportunity for industry stakeholders to have input into the evolution of AI regulation in the Canadian capital markets. Through this consultation process, the CSA seeks to foster responsible AI innovation while maintaining market integrity and investor protection. The consultation questions are set out below.

CSA Notice Consultation Questions

1. Are there use cases for AI systems that you believe cannot be accommodated without new or amended rules, or targeted exemptions from current rules? Please be specific as to the changes you consider necessary.

2. Should there be new or amended rules and/or guidance to address risks associated with the use of AI systems in capital markets, including related to risk management approaches to the AI system lifecycle? Should firms develop new governance frameworks or can existing ones be adapted? Should we consider adopting specific governance measures or standards (e.g. OSFI’s E-23 Guideline on Model Risk Management, ISO, NIST)?

3. Data plays a critical role in the functioning of AI systems and is the basis on which their outputs are created. What considerations should Market Participants keep in mind when determining what data sources to use for the AI systems they deploy (e.g. privacy, accuracy, completeness)? What measures should Market Participants take when using AI systems to account for the unique risks tied to data sources used by AI systems (e.g. measures that would enhance privacy, accuracy, security, quality, and completeness of data)?

4. What role should humans play in the oversight of AI systems (e.g. “human-in-the-loop”) and how should this role be built into a firm’s AI governance framework? Are there certain uses of AI systems in capital markets where direct human involvement in the oversight of AI systems is more important than others (e.g. use cases relying on machine learning techniques that may have lesser degrees of explainability)? Depending on the AI system, what necessary skills, knowledge, training, and expertise should be required? Please provide details and examples.

5. Is it possible to effectively monitor AI systems on a continuous basis to identify variations in model output using test-driven development, including stress tests, post-trade reviews, spot checks, and corrective action in the same ways as rules-based trading algorithms in order to mitigate against risks such as model drifts and hallucinations? If so, please provide examples. Do you have suggestions for how such processes derived from the oversight of algorithmic trading systems could be adapted to AI systems for trading recommendations and decisions?

6. Certain aspects of securities law require detailed documentation and tracing of decision-making. This type of recording may be difficult in the context of using models relying on certain types of AI techniques. What level of transparency/explainability should be built into an AI system during the design, planning, and building in order for an AI system’s outputs to be understood and explainable by humans? Should there be new or amended rules and/or guidance regarding the use of an AI system that offer less explainability (e.g. safeguards to independently verify the reliability of outputs)?

7. FinTech solutions that rely on AI systems proposing to provide KYC and onboarding, advice, and carry out discretionary investment management challenge existing reliance on proficient individuals to carry out registerable activity. Should regulatory accommodations be made to allow for such solutions and, if so, which ones? What restrictions should be imposed to provide the same regulatory outcomes and safeguards as those provided through current proficiency requirements imposed on registered individuals?

8. Given the capacity of AI systems to analyze a vast array of potential investments, should we alter our expectations relating to product shelf offerings and the universe of reasonable alternatives that representatives need to take into account in making recommendations that are suitable for clients and put clients’ interests first? How onerous would such an expanded responsibility be in terms of supervision and explainability of the AI systems used?

9. Should Market Participants be subject to any additional rules relating to the use of third-party products or services that rely on AI systems? Once such a third-party product or service is in use by a Market Participant, should the third-party provider be subject to requirements, and if so, based on what factors?

10. Does the increased use of AI systems in capital markets exacerbate existing vulnerabilities/systemic risks or create new ones? If so, please outline them. Are Market Participants adopting specific measures to mitigate against systemic risks? Should there be new or amended rules to account for these systemic risks? If so, please provide details.

Examples of systemic risks could include the following:

• AI systems working in a coordinated fashion to bring about a desired outcome, such as creating periods of market volatility in order to maximize profits;

• Widespread use of AI systems relying on the same, or limited numbers of, vendors to function (e.g., cloud or data providers), which could lead to financial stability risks resulting from a significant error or a failure with one large vendor;

• A herding effect where there is broad adoption of a single AI system or where several AI systems make similar investment or trading decisions, intentionally or unintentionally, due, for example, to similar design and data sources. This could lead to magnified market moves, including detrimental ones if a flawed AI system is widely used or is used by a sizable Market Participant;

• Widespread systemic biases in outputs of AI systems that affect efficient functioning and fairness of capital markets.