CSA STAFF NOTICE AND CONSULTATION 11-348 THE AI REVOLUTION: REDEFINING CANADIAN SECURITIES LAW

PART 3 – REPORTING ISSUER GUIDANCE

(non-investment fund reporting issuers)

Brian Koscak, PCMA Vice Chair

This article is Part 3 in a three-part series examining the Canadian Securities Administrators’ (the CSA) publication of CSA Staff Notice and Consultation 11-348 – Applicability of Canadian Securities Laws and the Use of Artificial Intelligence Systems in Capital Markets (the Notice). This article examines the Notice’s specific application to reporting issuers that are not investment funds (Non-IF Issuers), such as mining, oil and gas, real estate, biotech and other non-investment fund reporting issuers. It focuses largely on how these reporting issuers should disclose their use of artificial intelligence (AI) systems in meeting their periodic and continuous disclosure obligations under applicable securities law.

Part 1 discussed the overarching themes identified by the CSA, including technology neutrality in securities regulation, AI governance and oversight, explainability requirements, disclosure obligations, and management of AI-related conflicts of interest. You can access Part 1 here.

In Part 2, we discussed the application of Canadian securities law to AI systems in connection with dealers, advisors and investment fund managers (IFMs), and the 81-series funds IFMs manage. You can access Part 2 here.

This series of articles seeks to provide certain Market Participants[1] with a comprehensive understanding of how Canadian securities laws regulate “AI systems”[2] in our capital markets and the related obligations.

Specific Guidance for Non-IF Issuers

The Notice discusses how Non-IF Issuers can clearly and effectively disclose their use of AI systems to investors. The CSA builds on a company’s existing continuous disclosure obligations under National Instrument 51-102 Continuous Disclosure Obligations, under which companies provide updates through their Management’s Discussion and Analysis (MD&A) and Annual Information Form (AIF). Similar requirements apply to prospectus filings, ensuring consistent disclosure whether you’re buying shares in the open market or taking part in a new offering.

The CSA’s emphasis on accurate, equal, and timely access to material information extends to information about the use of AI. The CSA is essentially saying that in a world where AI capabilities can change rapidly, investors need regular updates about how reporting issuers are using these technologies and what risks they might face. This isn’t just about checking regulatory boxes; it’s about ensuring investors have the information they need to make informed investment decisions.

The CSA states that there’s no “one size fits all” approach to AI disclosure. It recognizes that a tech company building AI systems from scratch faces very different disclosure obligations from a retailer using off-the-shelf AI for inventory management. In addition, the CSA states that Non-IF Issuers should provide disclosure proportionate to the materiality of their AI systems and associated risks, avoiding generic boilerplate language. To enhance investor understanding, key disclosure elements should cover several crucial areas: the company’s definition and material use of AI (whether developed internally or externally sourced), significant risks and corresponding governance measures, potential impacts on business operations and financial performance, and any material factors or assumptions underlying forward-looking information about AI system usage, including updates to previously disclosed information.

Non-IF Issuers should note that the CSA will monitor their disclosure filings in relation to their use of AI systems, as part of the CSA’s ongoing continuous disclosure review program.

(a) Disclosure of Current AI Systems Business Use

The CSA’s message is unambiguous for all reporting issuers whose operations involve the use of AI systems or the production of AI-related products: if it is material, you’ve got to talk about it. But the way you talk about it matters just as much as what you say.

Given the complexity of AI systems, reporting issuers need to provide detailed information that helps investors understand what’s happening under the hood. It’s like the difference between a mechanic explaining exactly what’s wrong with your car versus simply saying “there’s an engine problem.”

The CSA’s seven-point checklist for AI disclosure is as follows:

- Definition: Reporting issuers need to explain how they define AI. This isn’t about copying textbook definitions; it’s about explaining how a reporting issuer understands and approaches AI technology.

- The What: Spell out the nature of a reporting issuer’s AI products or services. What are you actually building or using?

- The How and Why: Explain how the reporting issuer is using AI systems, what benefits it is getting and what risks it faces.

- The Impact: Detail the current or anticipated impact that the use or development of AI systems may have on a reporting issuer’s business and financial condition.

- Material Contracts: Disclose any material contracts of a reporting issuer relating to AI systems.

- The Journey: Explain events or conditions that have influenced the reporting issuer’s development, including any material AI investments; and

- The Competitive Advantage: Explain how the reporting issuer’s AI usage affects its competitive position in its primary markets.

Beyond these core requirements, Non-IF Issuers must pull back the curtain on their data sources, whether they’re building AI systems in-house or buying them from third parties. The CSA believes this level of transparency could help investors distinguish between reporting issuers with genuine AI capabilities and those just riding the AI buzzword wave.

(b) AI-Related Risk Factors

For AI risk factor disclosure, the CSA states that reporting issuers cannot hide behind boilerplate warnings about general technology risks. The Notice states that the CSA expects entity-specific disclosure about how AI may impact the business of a Non-IF Issuer.

The CSA stresses context. Non-IF Issuers can’t just list AI risks; they need to explain how their board and management assess and manage these risks. This expectation should prompt Non-IF Issuers to think more deeply about their AI governance practices, including accountability, risk management, and oversight mechanisms over the use of AI systems in their business.

For risk disclosure, the CSA focuses on three key elements: where the risks come from, what might happen if they materialize, and what companies are doing to mitigate them. The Notice also states that Non-IF Issuers should disclose material prior incidents where AI systems have raised regulatory, ethical, or legal concerns.

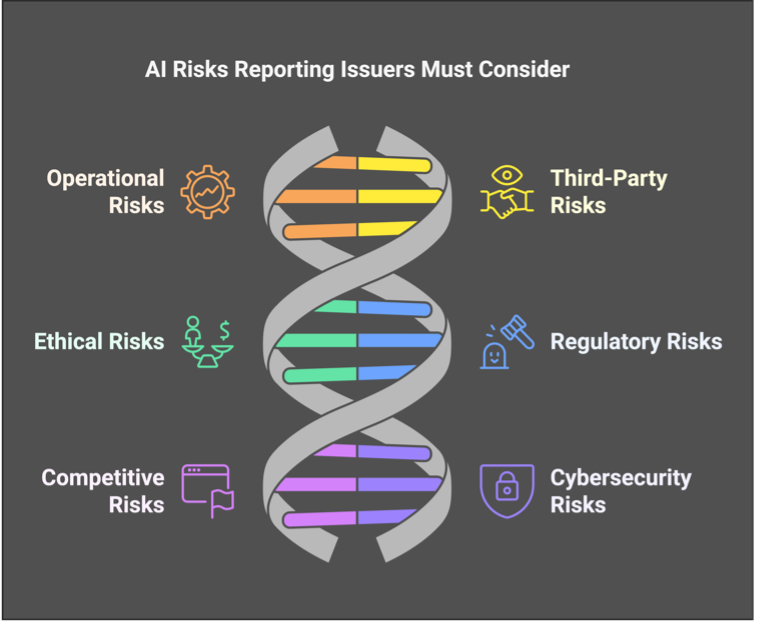

The CSA has identified six major categories of AI risk that reporting issuers should consider and disclose if material:

1. Operational Risks: This isn’t just about system failures. Non-IF Issuers should address everything from unintended consequences and misinformation to bias and technological challenges. They should also tackle thorny questions about data ownership, sourcing, and maintenance of data;

2. Third-Party Risks: In an acknowledgment that Non-IF Issuers may rely on external AI providers, the CSA suggests specific disclosure about the risks of depending on others for AI capabilities;

3. Ethical Risks: Perhaps the most forward-thinking category, this covers social and ethical issues arising from AI use, including conflicts of interest, human rights, privacy, and employment impacts. Reporting issuers need to consider how these issues could affect their reputation, liability, and costs;

4. Regulatory Risks: With AI regulation evolving rapidly, Non-IF Issuers need to explain how changing legal requirements and other standards might affect their AI uses or initiatives;

5. Competitive Risks: The CSA suggests Non-IF Issuers address how rapidly evolving AI technology might threaten their market position (i.e., operations, financial condition and reputation); and

6. Cybersecurity Risks: Last but certainly not least, Non-IF Issuers should address the specific security vulnerabilities that AI systems might introduce to their operations.

(c) Promotional Statements about AI-Related Use (AI washing)

In a pointed section of the Notice, the CSA takes direct aim at the AI hype in corporate communications. Want to claim your company uses AI “extensively”? You better be ready to prove it. Vague and unsubstantiated claims about AI capabilities will not be acceptable.

The CSA reiterates the requirement for balanced disclosure. Companies can’t just trumpet the benefits of their AI initiatives; they need to give equal airtime to the risks and challenges. It’s like requiring weight loss ads to spend as much time discussing potential side effects as they do showing “before and after” photos.

The CSA recognizes that disclosure, including disclosure about AI, isn’t limited to formal filings anymore. Social media posts about AI initiatives could trigger securities law obligations, even if they’re not explicitly aimed at investors. The message is clear: whether you’re filing an annual report or tweeting about your latest AI project, the same rules about accurate, balanced disclosure apply.

The Notice stresses the need to disclose unfavorable news as promptly and completely as favorable developments. This isn’t just about preventing selective disclosure; it’s about ensuring investors get a complete, balanced picture of a company’s AI initiatives. The CSA is effectively telling companies they can’t bury bad AI news while amplifying successes.

(d) AI and Forward-Looking Information

The Notice sets out the CSA’s expectations on navigating AI disclosure requirements involving forward-looking information (FLI). When making predictions about the success of your AI initiatives, you need to ensure you have more than just optimistic projections to substantiate your claims; otherwise, your pronouncements may be viewed as a misrepresentation.

The Notice is specific on FLI. Want to tell investors that your new AI system will boost revenues by 5%? Better be ready to show your work. The CSA expects issuers to disclose not just the prediction but also the material factors and assumptions underlying it. It’s like requiring weather forecasters to explain not just tomorrow’s temperature, but exactly how they arrived at that prediction.

The CSA reminds Non-IF Issuers of the requirement for ongoing updates to previously disclosed FLI. Reporting issuers can’t just make grand predictions about their AI future and then go silent. They need to keep investors informed about their progress toward those targets and explain any material deviations from previous forecasts. While Non-IF Issuers have the flexibility to disclose updated information in a news release before filing the MD&A, they can’t rely solely on news releases – the information must eventually make its way into their formal filings.

Conclusion: The New Era of AI Corporate Communication

The CSA’s Notice illustrates how Canadian securities regulators are approaching AI disclosure in the capital markets. Through this three-part series, we’ve examined how the CSA is adapting existing securities frameworks to address the unique challenges posed by AI systems, from broad regulatory principles to specific guidance for registrants and Non-IF Issuers.

For Non-IF Issuers in particular, the message is clear: meaningful AI disclosure requires careful consideration of materiality, specificity, and ongoing monitoring. The CSA expects companies to move beyond superficial “AI washing” to provide investors with detailed, balanced information about their AI initiatives, associated risks, and potential impacts on their business. Whether discussing current AI implementations, risk factors, promotional statements, or forward-looking information, companies must ensure their disclosures are substantive, accurate, and timely.

As AI continues to transform Canadian capital markets, this regulatory framework provides crucial guidance for market participants while maintaining enough flexibility to adapt to rapidly evolving technology. The challenge for reporting issuers moving forward will be to develop robust disclosure practices that satisfy regulatory requirements while providing investors with the information they need to make informed investment decisions in an AI-driven market landscape.

Next Steps

The CSA’s consultation period ends on March 30, 2025. The consultation provides an opportunity for industry stakeholders to shape the evolution of AI regulation in the Canadian capital markets. Through this consultation process, the CSA seeks to foster responsible AI innovation while maintaining market integrity and investor protection. The consultation questions in the Notice are set out below.

CSA Notice Consultation Questions

- Are there use cases for AI systems that you believe cannot be accommodated without new or amended rules, or targeted exemptions from current rules? Please be specific as to the changes you consider necessary.

- Should there be new or amended rules and/or guidance to address risks associated with the use of AI systems in capital markets, including related to risk management approaches to the AI system lifecycle? Should firms develop new governance frameworks or can existing ones be adapted? Should we consider adopting specific governance measures or standards (e.g. OSFI’s E-23 Guideline on Model Risk Management, ISO, NIST)? [3]

- Data plays a critical role in the functioning of AI systems and is the basis on which their outputs are created. What considerations should Market Participants keep in mind when determining what data sources to use for the AI systems they deploy (e.g. privacy, accuracy, completeness)? What measures should Market Participants take when using AI systems to account for the unique risks tied to data sources used by AI systems (e.g. measures that would enhance privacy, accuracy, security, quality, and completeness of data)?

- What role should humans play in the oversight of AI systems (e.g. “human-in-the-loop”) and how should this role be built into a firm’s AI governance framework? Are there certain uses of AI systems in capital markets where direct human involvement in the oversight of AI systems is more important than others (e.g. use cases relying on machine learning techniques that may have lesser degrees of explainability)? Depending on the AI system, what necessary skills, knowledge, training, and expertise should be required? Please provide details and examples.

- Is it possible to effectively monitor AI systems on a continuous basis to identify variations in model output using test-driven development, including stress tests, post-trade reviews, spot checks, and corrective action in the same ways as rules-based trading algorithms in order to mitigate against risks such as model drifts and hallucinations? If so, please provide examples. Do you have suggestions for how such processes derived from the oversight of algorithmic trading systems could be adapted to AI systems for trading recommendations and decisions?

- Certain aspects of securities law require detailed documentation and tracing of decision-making. This type of recording may be difficult in the context of using models relying on certain types of AI techniques. What level of transparency/explainability should be built into an AI system during the design, planning, and building in order for an AI system’s outputs to be understood and explainable by humans? Should there be new or amended rules and/or guidance regarding the use of an AI system that offer less explainability (e.g. safeguards to independently verify the reliability of outputs)?

- FinTech solutions that rely on AI systems proposing to provide KYC and onboarding, advice, and carry out discretionary investment management challenge existing reliance on proficient

[1] For greater clarity, this series of articles does not discuss the Notice in connection with its guidance for marketplaces and marketplace participants, clearing agencies and matching service utilities, trade repositories and derivative data reporting, designated rating organizations, and designated benchmark administrators.

[2] An “AI system” is a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.

[3] Office of the Superintendent of Financial Institutions (OSFI) Guideline E-23 on Model Risk Management; International Organization for Standardization (ISO): Standards for artificial intelligence (https://www.iso.org/artificial-intelligence); National Institute of Standards and Technology (NIST) AI Risk Management Framework (https://www.nist.gov/itl/ai-risk-management-framework).